Background Summary

There are many blog posts out there that attempt to cover connecting several Azure virtual networks through a hub in detail. Microsoft has provided rudimentary documentation on this subject since early 2015. Unfortunately, none of these resources (blogs or Microsoft’s own) cover the topic in enough detail to help you actually accomplish the task.

The scenario: you’ve got an application in PaaS or IaaS deployed in separate Azure Regions (Locations) and want to provide network connectivity between those Regions. Remember, Region = Location. The region/location terminology continues to be used inconsistently between the technical and the marketing documentation.

Before reading this post, you MUST understand the following Azure MSDN article. Better still, you should have already completed it, because we are going to build on the basic principles it covers.

Configure a VNet to VNet Connection (April 14, 2015): https://msdn.microsoft.com/en-us/library/azure/dn690122.aspx

To be honest, if you haven’t completed the tasks above successfully, in a sandbox Azure subscription, don’t proceed any further until you have; otherwise this will lead to nothing but frustration.

Scenario

Let’s paint a clear scenario before we get started. We have 3 Azure Virtual Networks (VNETs) as follows:

- VNET1 10.1.0.0/16 (East-US)
  - Subnet-1 10.1.0.0/19, or whatever you want.
  - 10.1.32.0/29 <– Don’t forget to add a Gateway Subnet!

- VNET2 10.2.0.0/16 (Central-US) <– This will become our HUB Network (aka Dual S2S VNet)
  - Subnet-1 10.2.0.0/19, or whatever you want.
  - 10.2.32.0/29 <– Don’t forget to add a Gateway Subnet!

- VNET3 10.3.0.0/16 (West-US)
  - Subnet-1 10.3.0.0/19, or whatever you want.
  - 10.3.32.0/29 <– Don’t forget to add a Gateway Subnet!

I’ve chosen VNet2 in Central-US as the HUB network simply to help you visualize this. Central-US will need to connect to both East-US and West-US, while there will be no connectivity between East-US and West-US directly.

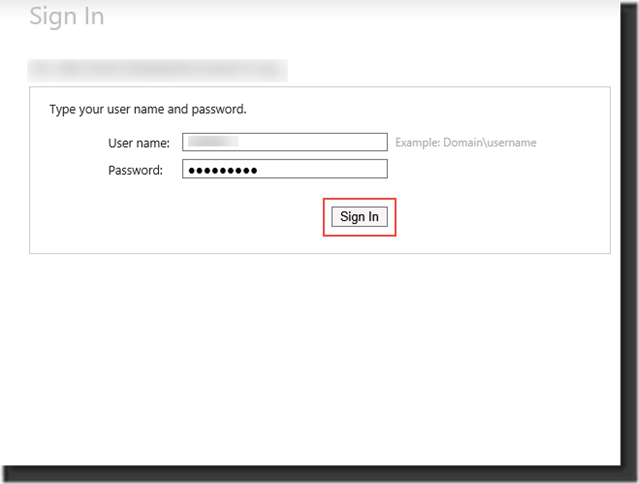
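Before creating anything, it is worth sanity-checking the address plan, because VNets that will be connected to each other must not have overlapping address spaces. The sketch below uses Python’s standard `ipaddress` module to confirm that each subnet, including the /29 gateway subnet, sits inside its own VNet and that the three VNets do not overlap. `VNETS` and `check_plan` are hypothetical helpers for illustration, not part of any Azure tooling.

```python
import ipaddress
from itertools import combinations

# Address plan from the scenario above (Subnet-1 sizes are the post's "whatever you want" examples).
VNETS = {
    "VNET1": {"space": "10.1.0.0/16", "subnets": ["10.1.0.0/19", "10.1.32.0/29"]},
    "VNET2": {"space": "10.2.0.0/16", "subnets": ["10.2.0.0/19", "10.2.32.0/29"]},
    "VNET3": {"space": "10.3.0.0/16", "subnets": ["10.3.0.0/19", "10.3.32.0/29"]},
}


def check_plan(vnets):
    """Return a list of problems found in the plan; an empty list means it is consistent."""
    problems = []
    spaces = {name: ipaddress.ip_network(v["space"]) for name, v in vnets.items()}
    for name, v in vnets.items():
        nets = [ipaddress.ip_network(s) for s in v["subnets"]]
        # Every subnet (including the gateway subnet) must fit inside its own VNet.
        for net in nets:
            if not net.subnet_of(spaces[name]):
                problems.append(f"{name}: {net} is outside {spaces[name]}")
        # Subnets within one VNet must not overlap each other.
        for a, b in combinations(nets, 2):
            if a.overlaps(b):
                problems.append(f"{name}: {a} overlaps {b}")
    # VNets that will be connected must not share address space.
    for (n1, s1), (n2, s2) in combinations(spaces.items(), 2):
        if s1.overlaps(s2):
            problems.append(f"{n1} {s1} overlaps {n2} {s2}")
    return problems


print(check_plan(VNETS))  # → []
```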

Step 1

After you have created these three (3) VNets, using either the Azure Management Console or PowerShell, the following steps must be taken, in order, to achieve our goal.

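- Create VNET1-Local as a Local Network with gateway address 1.0.0.1 and address space 10.1.0.0/16

- Create VNET2-Local as a Local Network with gateway address 1.0.0.1 and address space 10.2.0.0/16

- Create VNET3-Local as a Local Network with gateway address 1.0.0.1 and address space 10.3.0.0/16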

When creating these Local Networks in the Azure Management Console, simply specify a dummy address like 1.0.0.1 for the Gateway initially. We will come back and edit/update these dummy Gateway addresses with the real IP addresses once Azure finishes provisioning them.
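For readers who prefer working from the exported NetworkConfig.xml rather than the portal, here is a sketch of the same three Local Network definitions built with Python’s `xml.etree.ElementTree`. The element names (`LocalNetworkSite`, `AddressSpace`, `AddressPrefix`, `VPNGatewayAddress`) and the namespace reflect the classic network configuration schema as I understand it; treat them as an assumption and compare them against your own exported file. `LOCAL_NETWORKS` and `local_network_sites` are illustrative names.

```python
import xml.etree.ElementTree as ET

# Namespace of classic (Service Management) NetworkConfig.xml files (assumption: verify against your export).
NS = "http://schemas.microsoft.com/ServiceHosting/2011/07/NetworkConfiguration"
DUMMY_GATEWAY = "1.0.0.1"  # placeholder until Azure has provisioned the real gateways

LOCAL_NETWORKS = {
    "VNET1-Local": "10.1.0.0/16",
    "VNET2-Local": "10.2.0.0/16",
    "VNET3-Local": "10.3.0.0/16",
}


def local_network_sites(local_networks, gateway=DUMMY_GATEWAY):
    """Build a <LocalNetworkSites> element with one site per VNet, all using the same gateway address."""
    sites = ET.Element(f"{{{NS}}}LocalNetworkSites")
    for name, prefix in local_networks.items():
        site = ET.SubElement(sites, f"{{{NS}}}LocalNetworkSite", name=name)
        space = ET.SubElement(site, f"{{{NS}}}AddressSpace")
        ET.SubElement(space, f"{{{NS}}}AddressPrefix").text = prefix
        ET.SubElement(site, f"{{{NS}}}VPNGatewayAddress").text = gateway
    return sites


ET.register_namespace("", NS)  # serialize with a default xmlns instead of an ns0: prefix
print(ET.tostring(local_network_sites(LOCAL_NETWORKS), encoding="unicode"))
```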

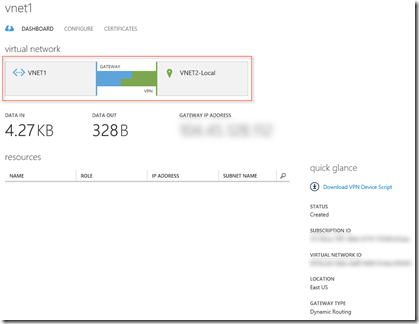

Configure VNET1 –> VNET2-Local with the Gateway Subnet (VNET2-Local’s gateway is still the dummy 1.0.0.1), AND VNET2 –> VNET1-Local with the Gateway Subnet (also still 1.0.0.1). These are reciprocals of each other. Remember? VNET1 (East-US) must point to VNET2 (Central-US), and vice versa, if you want packets to flow back and forth between the two networks! This is no different from what you did earlier when following Configure a VNet to VNet Connection (April 14, 2015): https://msdn.microsoft.com/en-us/library/azure/dn690122.aspx.
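The reciprocal rule gets easy to break once a third VNet is involved, so here is a small sketch that checks it. It assumes the post’s naming convention, where VNETx is represented to the other VNets by VNETx-Local; `CONNECTIONS` and `missing_reciprocals` are illustrative names, not anything Azure provides.

```python
# Step 1 gateway connections: each VNet -> the Local Networks it points at (illustrative data).
CONNECTIONS = {
    "VNET1": ["VNET2-Local"],
    "VNET2": ["VNET1-Local"],
}

SUFFIX = "-Local"  # naming convention used throughout this post


def missing_reciprocals(connections):
    """Return (vnet, local_network) pairs that must be added so every connection is mirrored.

    If VNETa points at VNETb-Local, then VNETb must point back at VNETa-Local.
    """
    missing = []
    for vnet, targets in connections.items():
        for target in targets:
            peer = target[: -len(SUFFIX)] if target.endswith(SUFFIX) else target
            if vnet + SUFFIX not in connections.get(peer, []):
                missing.append((peer, vnet + SUFFIX))
    return missing


print(missing_reciprocals(CONNECTIONS))  # → []
```

Adding `"VNET3": ["VNET2-Local"]` from Step 2 makes it report `[("VNET2", "VNET3-Local")]`, which is exactly the reference the hub still needs.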

In the Azure Management Console, create the Dynamic Gateways for both of them. These cannot be Static! Creation can take around 30 minutes. Once both gateways exist, update VNET1-Local with VNET1’s (East-US) gateway IP address and VNET2-Local with VNET2’s (Central-US) gateway IP address, replacing the dummy value of 1.0.0.1 we used earlier.
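Keeping track of which Local Network still points at the dummy address is simple bookkeeping, sketched below. It assumes you copy each gateway IP from the portal; the IPs shown are hypothetical addresses from the 203.0.113.0/24 documentation range, and `update_gateways` and `still_dummy` are illustrative helpers. Note the mapping: VNETx-Local always receives VNETx’s own gateway IP.

```python
import ipaddress

DUMMY_GATEWAY = "1.0.0.1"


def update_gateways(local_gateways, vnet_gateway_ips):
    """Return a copy of local_gateways with VNETx-Local set to the gateway IP Azure assigned to VNETx.

    Local Networks whose VNet has no gateway IP yet keep their current (dummy) value.
    """
    updated = dict(local_gateways)
    for vnet, ip in vnet_gateway_ips.items():
        ipaddress.ip_address(ip)  # raises ValueError on a mistyped address
        site = f"{vnet}-Local"
        if site in updated:
            updated[site] = ip
    return updated


def still_dummy(local_gateways):
    """Local Networks that still point at the placeholder address."""
    return [name for name, ip in local_gateways.items() if ip == DUMMY_GATEWAY]


local = {"VNET1-Local": DUMMY_GATEWAY, "VNET2-Local": DUMMY_GATEWAY, "VNET3-Local": DUMMY_GATEWAY}
# Hypothetical gateway IPs; use the ones the portal shows you.
local = update_gateways(local, {"VNET1": "203.0.113.10", "VNET2": "203.0.113.20"})
print(still_dummy(local))  # → ['VNET3-Local']
```

VNET3-Local stays on the placeholder until VNET3’s gateway is created in Step 2.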

This is the same as what you did earlier when following Configure a VNet to VNet Connection (April 14, 2015): https://msdn.microsoft.com/en-us/library/azure/dn690122.aspx. Don’t bother setting the IPsec shared keys just yet. Take a breath and pat yourself on the back!

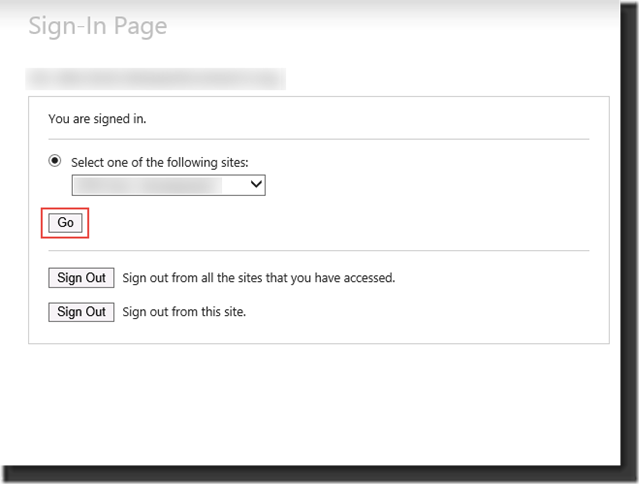

Step 2

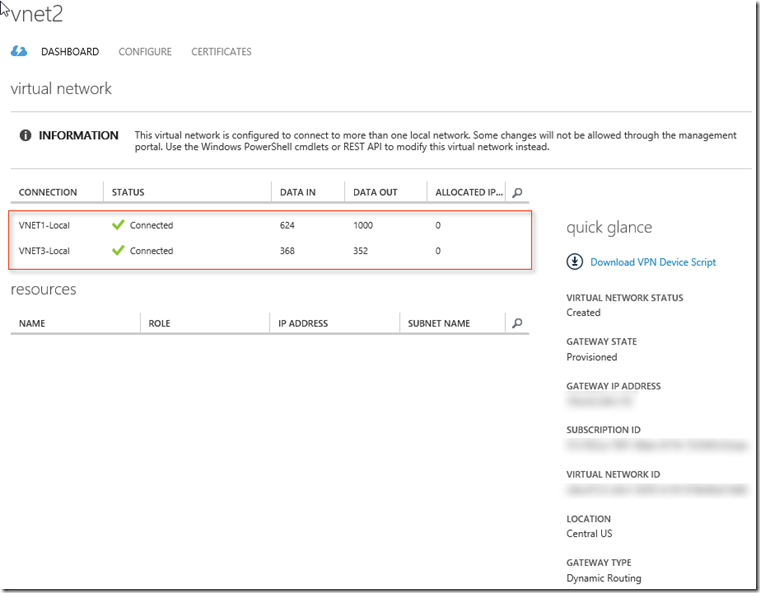

Now we’re going to expand this so that VNET3/West-US ALSO connects to VNET2/Central-US, making VNET2/Central-US the HUB Network.

Configure VNET3 –> VNET2-Local with the Gateway Subnet. Honestly, the choice doesn’t really matter here as long as VNET3 doesn’t point to itself; you could just as well do VNET3 –> VNET1-Local. Whichever VNet you point at is the one that must be configured as the DUAL (HUB) VNet in the NetworkConfig.xml file, so that it reciprocates the connection back to VNET3.
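Whichever VNet VNET3 points at ends up needing two Local Network references, and that is what makes it the hub. The sketch below derives this from the list of connections, under the same VNETx-Local naming assumption; `required_refs`, `hub_vnets` and `STEP2` are hypothetical names for illustration.

```python
SUFFIX = "-Local"  # naming convention used throughout this post


def required_refs(connections):
    """For each VNet, the Local Networks it must reference so every connection is reciprocal."""
    required = {vnet: set(targets) for vnet, targets in connections.items()}
    for vnet, targets in connections.items():
        for target in targets:
            peer = target[: -len(SUFFIX)]  # assumes every target follows the VNETx-Local convention
            required.setdefault(peer, set()).add(vnet + SUFFIX)
    return required


def hub_vnets(connections):
    """VNets that must reference more than one Local Network, i.e. the DUAL (HUB) VNets."""
    return sorted(v for v, refs in required_refs(connections).items() if len(refs) > 1)


# Step 2: VNET3 points at VNET2-Local, on top of the Step 1 pair.
STEP2 = {"VNET1": ["VNET2-Local"], "VNET2": ["VNET1-Local"], "VNET3": ["VNET2-Local"]}
print(hub_vnets(STEP2))  # → ['VNET2']
print(sorted(required_refs(STEP2)["VNET2"]))  # → ['VNET1-Local', 'VNET3-Local']
```

Pointing VNET3 at VNET1-Local instead makes `hub_vnets` return `['VNET1']`, which matches the point above that either choice works as long as the hub reciprocates.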

Create the Dynamic Gateway for VNET3 just like you did for VNET1 and VNET2. Again, expect to wait about 30 minutes for it to be created. Once it is, don’t forget to update VNET3-Local with the gateway IP returned by VNET3’s Dynamic Gateway creation.

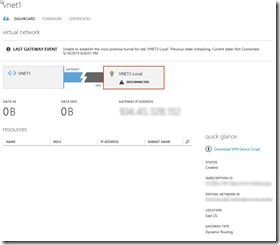

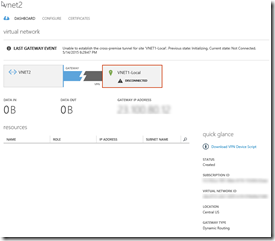

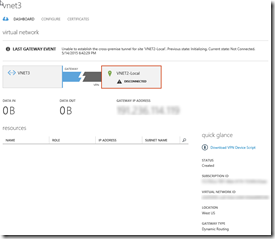

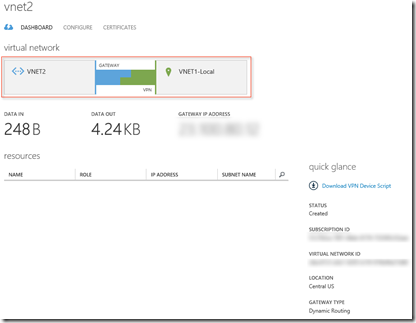

At this point you should have something like this.

Step 3

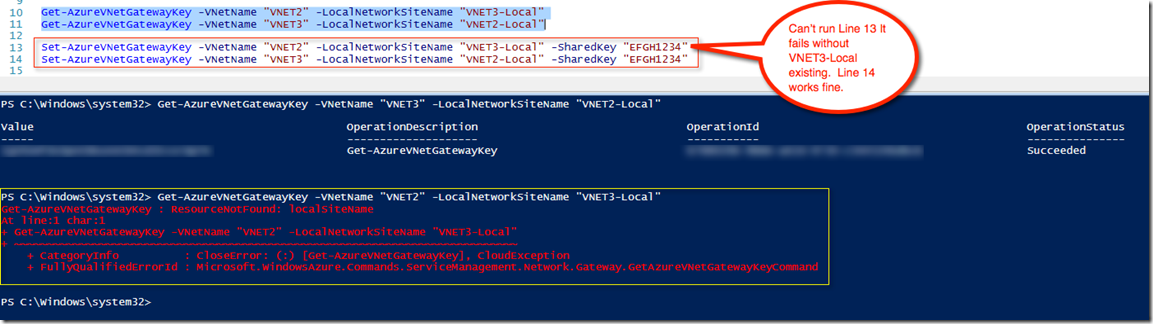

Run two PowerShell cmdlets, one each for VNET1 and VNET2, to set the bi-directional IPsec shared keys. Replace “SharedKeyOfChoice” with a key of your choosing, using the same key in both commands:

Set-AzureVNetGatewayKey -VNetName "VNET1" -LocalNetworkSiteName "VNET2-Local" -SharedKey "SharedKeyOfChoice"

Set-AzureVNetGatewayKey -VNetName "VNET2" -LocalNetworkSiteName "VNET1-Local" -SharedKey "SharedKeyOfChoice"
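If you’d rather not invent a key by hand, the sketch below generates one with Python’s `secrets` module and prints both reciprocal commands with the same key. The 32-character alphanumeric key is my own assumption (it simply avoids quoting problems), not a documented Azure requirement; the cmdlet and its parameters are the ones used above, and `make_shared_key` and `gateway_key_commands` are illustrative helpers.

```python
import secrets
import string


def make_shared_key(length=32):
    """Random alphanumeric pre-shared key; alphanumeric only to avoid quoting issues."""
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


def gateway_key_commands(vnet_a, vnet_b, key):
    """The two reciprocal Set-AzureVNetGatewayKey calls, using the same key on both sides."""
    template = 'Set-AzureVNetGatewayKey -VNetName "{vnet}" -LocalNetworkSiteName "{local}" -SharedKey "{key}"'
    return [
        template.format(vnet=vnet_a, local=f"{vnet_b}-Local", key=key),
        template.format(vnet=vnet_b, local=f"{vnet_a}-Local", key=key),
    ]


key = make_shared_key()
for command in gateway_key_commands("VNET1", "VNET2", key):
    print(command)
```

Calling `gateway_key_commands("VNET2", "VNET3", key)` produces the equivalent pair for the hub-to-VNET3 connection in Step 4.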

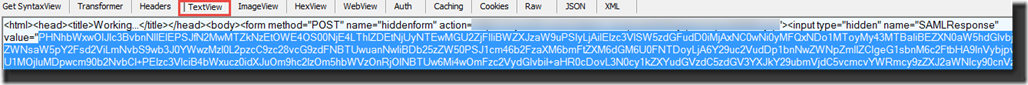

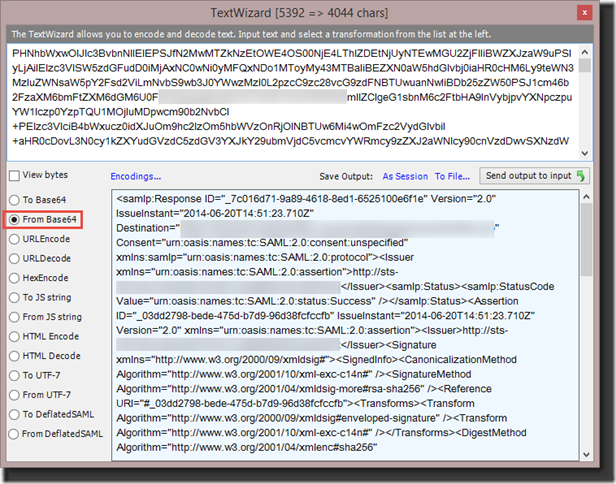

Step 4

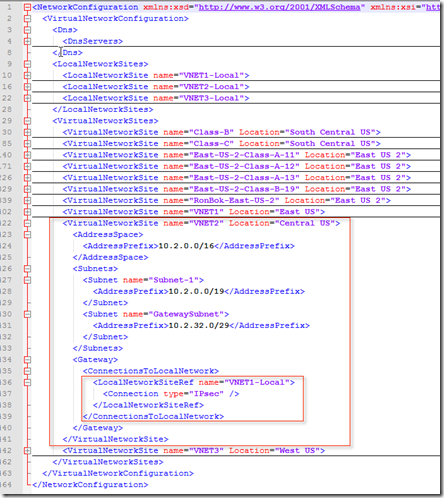

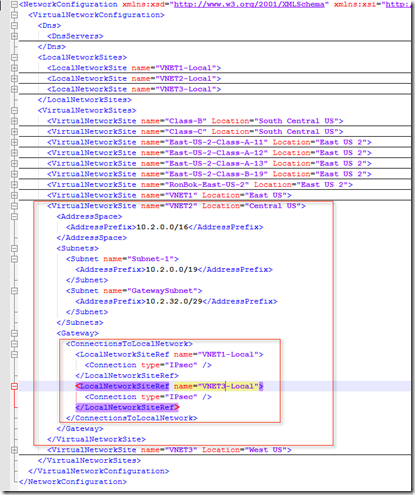

This is the more challenging part. Export the NetworkConfig.xml file and update VNET2 to include the Local Network for VNET3-Local. Then re-import the file after saving it.

I like using Notepad++ for this since it provides some nice features for editing XML.
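If you prefer to script the edit, here is a sketch using Python’s `xml.etree.ElementTree`. The element names (`VirtualNetworkSite`, `Gateway`, `ConnectionsToLocalNetwork`, `LocalNetworkSiteRef`, `Connection type="IPsec"`) and the namespace reflect the classic network configuration schema as I understand it; compare them against your own exported file before relying on this. `SAMPLE` is a trimmed, hypothetical excerpt, not a complete file, and `add_local_network_ref` is an illustrative helper.

```python
import xml.etree.ElementTree as ET

# Namespace of classic NetworkConfig.xml files (assumption: check your own export).
NS = "http://schemas.microsoft.com/ServiceHosting/2011/07/NetworkConfiguration"
ET.register_namespace("", NS)  # write a default xmlns rather than ns0: prefixes


def q(tag):
    """Qualify a tag name with the NetworkConfiguration namespace."""
    return f"{{{NS}}}{tag}"


def add_local_network_ref(root, vnet_name, local_site_name):
    """Add an IPsec LocalNetworkSiteRef to a VNet's gateway.

    Returns True if the reference was added, False if it was already present.
    """
    site = root.find(f".//{q('VirtualNetworkSite')}[@name='{vnet_name}']")
    if site is None:
        raise ValueError(f"VNet {vnet_name!r} not found in the configuration")
    gateway = site.find(q("Gateway"))
    if gateway is None:
        gateway = ET.SubElement(site, q("Gateway"))
    conns = gateway.find(q("ConnectionsToLocalNetwork"))
    if conns is None:
        conns = ET.SubElement(gateway, q("ConnectionsToLocalNetwork"))
    if any(ref.get("name") == local_site_name for ref in conns.findall(q("LocalNetworkSiteRef"))):
        return False
    ref = ET.SubElement(conns, q("LocalNetworkSiteRef"), name=local_site_name)
    ET.SubElement(ref, q("Connection"), type="IPsec")
    return True


# Trimmed, hypothetical excerpt: VNET2 currently points only at VNET1-Local.
SAMPLE = f"""<NetworkConfiguration xmlns="{NS}">
  <VirtualNetworkConfiguration>
    <VirtualNetworkSites>
      <VirtualNetworkSite name="VNET2" Location="Central US">
        <Gateway>
          <ConnectionsToLocalNetwork>
            <LocalNetworkSiteRef name="VNET1-Local">
              <Connection type="IPsec" />
            </LocalNetworkSiteRef>
          </ConnectionsToLocalNetwork>
        </Gateway>
      </VirtualNetworkSite>
    </VirtualNetworkSites>
  </VirtualNetworkConfiguration>
</NetworkConfiguration>"""

root = ET.fromstring(SAMPLE)
add_local_network_ref(root, "VNET2", "VNET3-Local")
print(ET.tostring(root, encoding="unicode"))
```

Against a real export you would load it with `ET.parse("NetworkConfig.xml")`, call `add_local_network_ref(tree.getroot(), "VNET2", "VNET3-Local")`, and save it with `tree.write("NetworkConfig.xml", encoding="utf-8", xml_declaration=True)` before re-importing.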

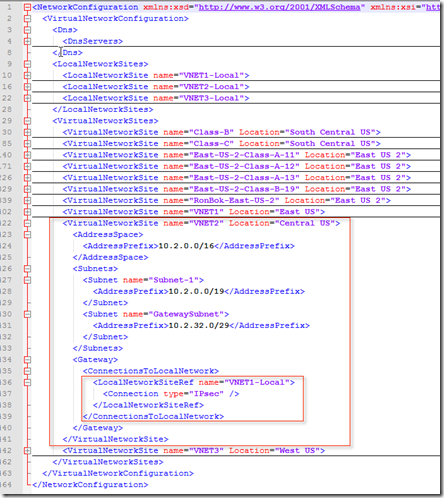

We want to go from this:

From this… –>

|

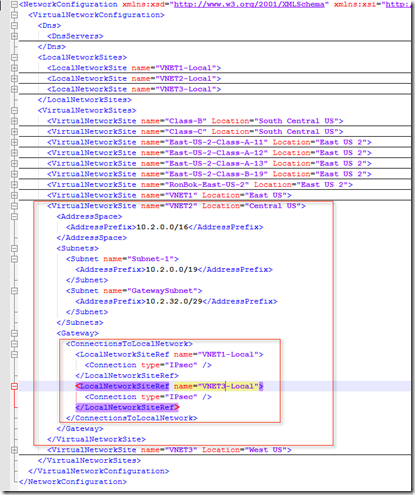

–> …to this!

|

Now re-import the NetworkConfig.xml file to update the network with this change.

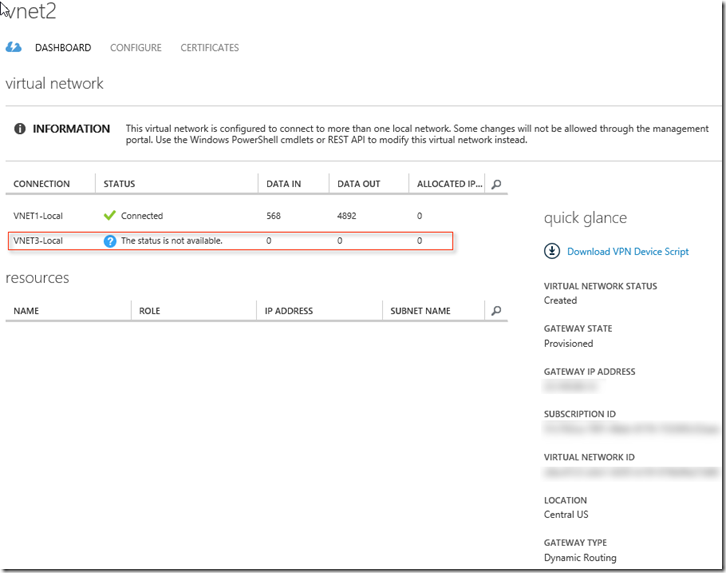

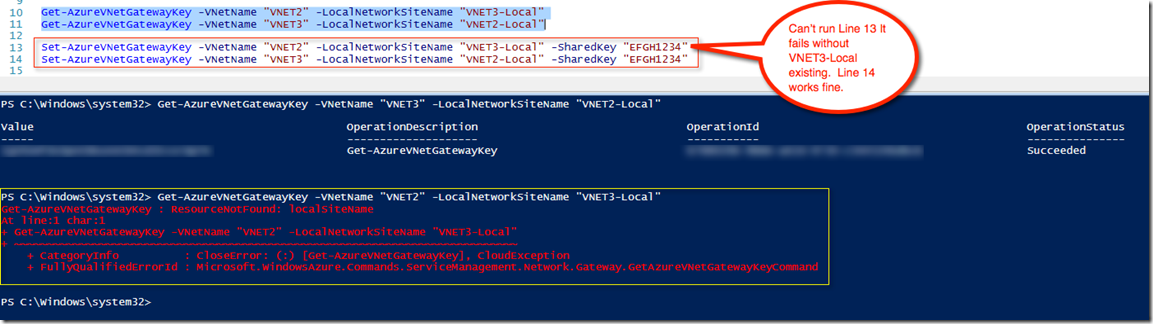

Every single blog post out there then shows both connections on the HUB, one connected and the other disconnected. This has NOT been my experience. Instead, you end up with VNET1-Local showing as connected, while the newly added connection on the HUB (VNET3-Local) shows “The status is not available.” This status prevents you from establishing the connection by applying a shared IPsec key as before.

If you get this, you MUST delete the gateway previously created on VNET2 and re-create it! Another 30-minute wait…

Why? If you try to establish the connection by setting the IPsec keys, the PowerShell cmdlet will fail, 100% of the time, because as far as Azure is concerned VNET3-Local does NOT exist on VNET2. Basically, Azure didn’t update the network.
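Before deleting the gateway, a quick way to rule out the file itself is to check that every `LocalNetworkSiteRef` names a `LocalNetworkSite` defined in the same NetworkConfig.xml. This sketch does not detect the behaviour described above, where the gateway does not pick up an otherwise valid change; it only rules out a typo on your side. The schema element names are my assumption about the classic format, `dangling_refs` is an illustrative helper, and `SAMPLE` is a trimmed, hypothetical excerpt that would not be valid for import as-is.

```python
import xml.etree.ElementTree as ET

# Namespace of classic NetworkConfig.xml files (assumption: check your own export).
NS = "http://schemas.microsoft.com/ServiceHosting/2011/07/NetworkConfiguration"


def q(tag):
    """Qualify a tag name with the NetworkConfiguration namespace."""
    return f"{{{NS}}}{tag}"


def dangling_refs(root):
    """(vnet, local_network) pairs where a LocalNetworkSiteRef names no defined LocalNetworkSite."""
    defined = {site.get("name") for site in root.iter(q("LocalNetworkSite"))}
    return [
        (vnet.get("name"), ref.get("name"))
        for vnet in root.iter(q("VirtualNetworkSite"))
        for ref in vnet.iter(q("LocalNetworkSiteRef"))
        if ref.get("name") not in defined
    ]


# Trimmed example: VNET2 references VNET3-Local, but only VNET1-Local is defined.
SAMPLE = f"""<NetworkConfiguration xmlns="{NS}">
  <VirtualNetworkConfiguration>
    <LocalNetworkSites>
      <LocalNetworkSite name="VNET1-Local" />
    </LocalNetworkSites>
    <VirtualNetworkSites>
      <VirtualNetworkSite name="VNET2">
        <Gateway>
          <ConnectionsToLocalNetwork>
            <LocalNetworkSiteRef name="VNET1-Local" />
            <LocalNetworkSiteRef name="VNET3-Local" />
          </ConnectionsToLocalNetwork>
        </Gateway>
      </VirtualNetworkSite>
    </VirtualNetworkSites>
  </VirtualNetworkConfiguration>
</NetworkConfiguration>"""

print(dangling_refs(ET.fromstring(SAMPLE)))  # → [('VNET2', 'VNET3-Local')]
```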

Once you have deleted and re-created the VNET2 gateway, make sure you update VNET2-Local with its new gateway IP address.

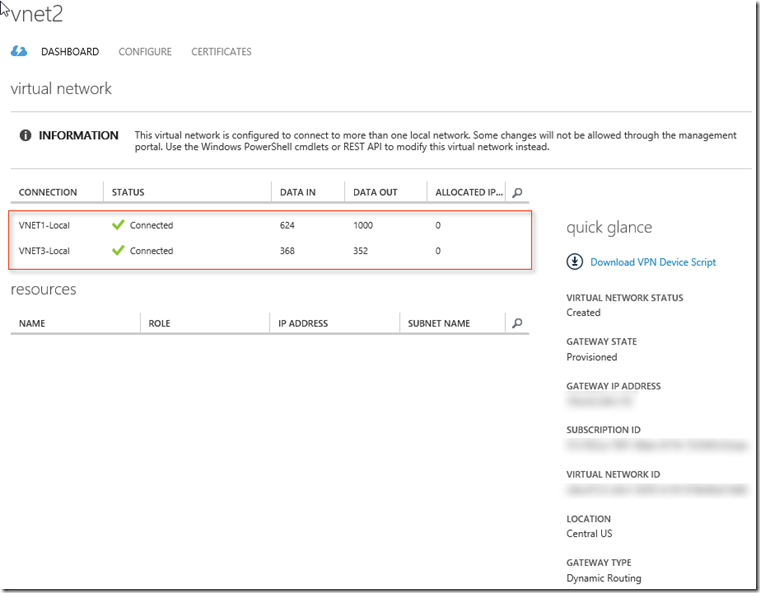

Then the PowerShell commands from Step 3, this time run for VNET2 –> VNET3-Local and VNET3 –> VNET2-Local, WILL WORK, because VNET3-Local should now show as “Disconnected” rather than “The status is not available.”

Step 5

The proof now is to temporarily place 3 VMs, one in each network, and enable ICMP IPv4 in their firewalls. You should then be able to ping between VNET1 and VNET2, as well as between VNET2 and VNET3, but not directly between the machines in VNET1 and VNET3.
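The expected outcome of that test can be written down as a tiny model, which makes a handy checklist while you ping. This sketch encodes the post’s claim that the hub does not forward traffic between VNET1 and VNET3; `LINKS`, `can_ping` and `expected_results` are illustrative names, and the model says nothing about firewall settings on the VMs themselves.

```python
from itertools import combinations

# Direct VNet-to-VNet connections once Step 4 is complete (VNET2 is the hub).
LINKS = {frozenset(("VNET1", "VNET2")), frozenset(("VNET2", "VNET3"))}


def can_ping(a, b, links=LINKS):
    """True when a direct connection exists; the hub is assumed NOT to forward traffic between spokes."""
    return a == b or frozenset((a, b)) in links


def expected_results(vnets, links=LINKS):
    """Expected outcome of a ping between test VMs in every pair of VNets."""
    return {(a, b): can_ping(a, b, links) for a, b in combinations(vnets, 2)}


for pair, ok in expected_results(["VNET1", "VNET2", "VNET3"]).items():
    print(*pair, "reachable" if ok else "NOT reachable")
# VNET1 VNET2 reachable
# VNET1 VNET3 NOT reachable
# VNET2 VNET3 reachable
```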

If you have any questions about this article or need assistance, please feel free to comment below.